Query MongoDB using SQL with Presto

With an increasing number of specialized databases, each having their own query languages, data analysts have a hard time to combine data from multiples sources. To mitigate this issue, Facebook created Presto, a high performance, distributed SQL query engine for big data. I will be creating a small simulation using Metabase, a web based open-source BI solution to visualize data from MongoDB.

Docker

Docker is a container platform. Containers package software applications and all their dependencies, which helps enable reproducible infrastructure. Docker Hub contains many container images so we have a starting point and don’t have to package everything ourselves. This is extremely helpful, specially during development.

Each container should only have one function. For example, a simple scenario when doing back-end development is to use a container for the application and another for the database. To connect the containers in a local environment, Docker Compose is the easiest solution but for production, the recommended approach is to use an orchestration system like Kubernetes.

Presto

Following the rise of NoSQL databases, many specialized query languages exist today. This leads to huge data integration efforts and expensive ETL processes. In the Hadoop world, a number of solutions emerged to enable the usage of SQL to retrieve data, Presto being the most interesting in my opinion.

The main advantage of Presto is that it has many data connectors, such as Kafka, Cassandra, Elasticsearch, MongoDB, Postgres, etc… It is then able to infer the schema automatically and handle semi-structured data. Arrays, nested objects, multi-database joins and the regular SQL operations are all supported.

Apache Drill is a very similar alternative to Presto, however it appears to be less popular and doesn’t support as many data sources. Performance comparisons are out of the scope for this post, but Presto is used by big players in data-intensive environments. Amazon created Athena which is based on Presto and heavily integrated in AWS. There is another recent popular Presto fork called Trino.

Metabase

There is a lot of commercial Business Intelligence software out there. Metabase stands out for being an open-source BI technology that is easy to use even for people that don’t know SQL, allowing them to explore the data and create web dashboards. Some advanced visualizations still require SQL knowledge.

Other alternatives include Redash and Superset. From my research, these support more chart types but are less user friendly and deployment is a bit more complicated.

MongoDB

MongoDB is a document-oriented database. Documents are stored as JSON objects, making it a good choice for semi-structured data with a flexible schema. It does not support SQL out of the box, which makes it harder for analysts to extract data because they have to learn another query language.

Simulation

I created 4 containers, 2 for different databases, 1 for Presto and the last for Metabase. Application data should be stored in volumes. Configuration files can either be copied and stored as part of the image or we can follow the volume approach. On Windows, named volumes must be used in some cases because of file permission issues.

1 | version: '3.4' |

I’m including here some commands that I used for testing. JSON Generator is a very nice tool to create datasets. For more details about how to seed the databases and configure Presto, check the repository.

1 | docker exec -it mongo bash |

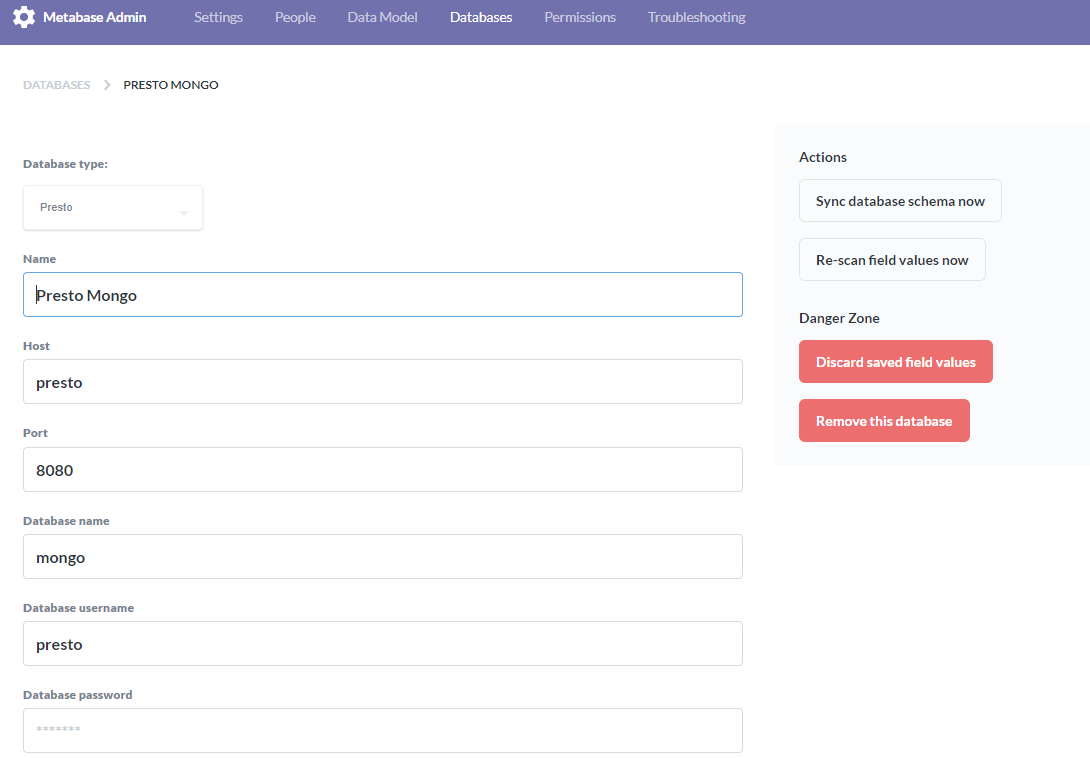

Metabase setup

Unlike the other tools, on Metabase there is no way to import/export dashboard configurations, other than copying the database it uses, which is H2 by default. For that reason I have included the data folder in the project repository.

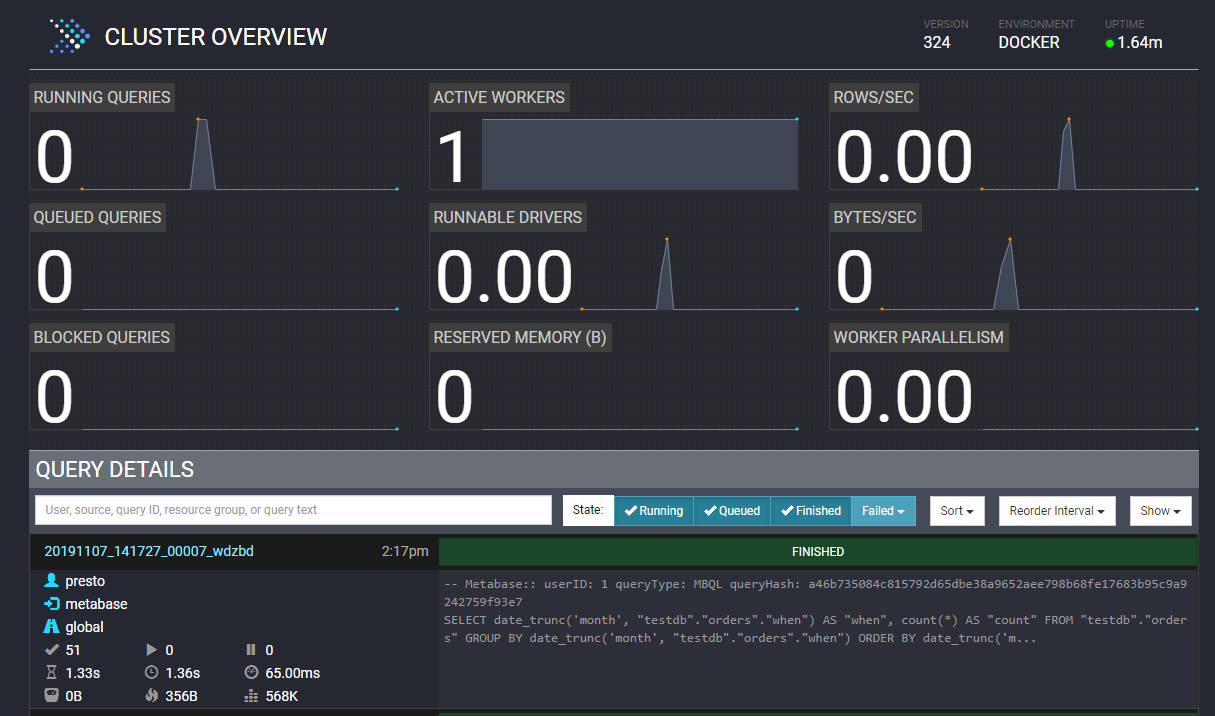

Presto UI

It is possible to see the queries that are executed by the Presto cluster. A distributed system is required to handle large amounts of data.

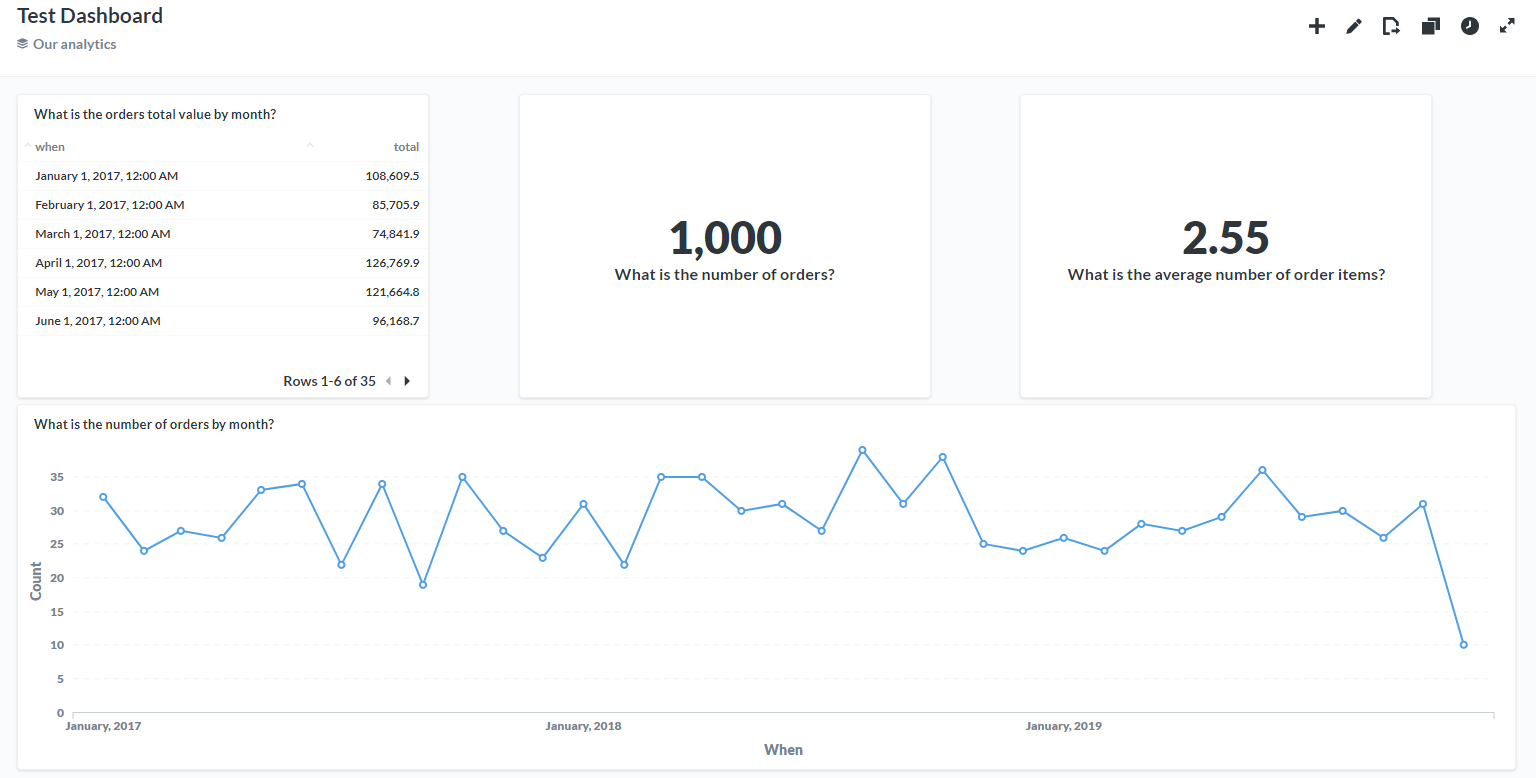

Dashboard

This final dashboard can be obtained by navigating to localhost:8000 after running docker-compose up. During the first manual setup I chose test[at]example.com as the username and test1234 for the password. Ideally this should be a configuration of the image or automatically setup when the container starts.

Closing thoughts

I recently had to create a web dashboard that needed data from multiple databases and these technologies allowed me to quickly build a quality prototype. Feel free to check the repository.