Azure Kubernetes Service Pipelines

Over the last few months I have been working with Azure DevOps and completed Microsoft’s DevOps Engineer certification. Recently I’ve also been learning Kubernetes and wanted to build an end-to-end pipeline that deploys a sample Go application, with Ingress and TLS, to the managed Azure Kubernetes Service (AKS). I will describe the steps I’ve taken and share the GitHub repo with all the Infrastructure as Code (IaC), written with Terraform.

Azure DevOps

Azure DevOps is Microsoft’s solution for automating the software development lifecycle. It offers the following services:

Azure Boards to manage work items, similar to Jira.

Azure Pipelines for CI/CD automation, like Jenkins or GitHub Actions.

Azure Repos for hosting Git or TFVC repositories.

Azure Test Plans for manual testing (really expensive).

Azure Artifacts to publish packages, an alternative to Artifactory.

Using a SaaS instead of rolling your own comes at a cost. Pricing is a bit high at 5€ per user and 12.65€ per parallel job (for a self-hosted agent), with 5 free users and 1 free parallel job. For bigger enterprises, self-hosted agents are usually a better option than Microsoft-hosted agents: parallel jobs are cheaper, builds should start faster, and you can make use of incremental builds, internal networking and managed identities. When choosing Azure DevOps over other CI/CD tools, pricing has to be considered, as the free tier limits are reached quickly and pipelines start to queue; on the other hand, there is less infrastructure and code to manage.
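To make the pricing concrete, here is a small Go sketch (Go because the sample app is written in it) that estimates a bill from the figures above. It assumes the prices are monthly and that only users and self-hosted parallel jobs beyond the free allowance are billed; the team size in `main` is hypothetical.

```go
package main

import "fmt"

// Figures quoted in this post (EUR per month). Treat them as assumptions and
// check the current Azure DevOps pricing page before relying on them.
const (
	pricePerUserCents = 500  // 5.00 € per user beyond the free ones
	pricePerJobCents  = 1265 // 12.65 € per self-hosted parallel job beyond the free one
	freeUsers         = 5
	freeJobs          = 1
)

// atLeastZero clamps negative counts (fewer users or jobs than the free tier) to zero.
func atLeastZero(n int) int {
	if n < 0 {
		return 0
	}
	return n
}

// monthlyCostCents estimates the monthly bill in euro cents; integer cents
// avoid floating-point rounding errors.
func monthlyCostCents(users, parallelJobs int) int {
	return atLeastZero(users-freeUsers)*pricePerUserCents +
		atLeastZero(parallelJobs-freeJobs)*pricePerJobCents
}

func main() {
	// Hypothetical team of 10 developers sharing 3 self-hosted parallel jobs.
	cost := monthlyCostCents(10, 3)
	fmt.Printf("%d.%02d €/month\n", cost/100, cost%100) // prints 50.30 €/month
}
```

Adding a single extra parallel job to stop pipelines from queuing costs about as much as two and a half paid users, which is why the job count tends to dominate the bill.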

Kubernetes

In recent years, Kubernetes has become the standard for managing applications deployed as containers. It simplifies operations such as high availability, autoscaling, rolling updates, rollbacks and service discovery. Some of the most important concepts are:

Pods - groups of containers that share the same compute resources and network. Each Pod is scheduled to run on a node in the cluster and gets its own IP address.

Deployments - describe the desired state of pods, like the number of replicas and rollout strategy.

Services - expose a set of Pods as a network service, either inside the cluster (ClusterIP) or outside it (NodePort, LoadBalancer).
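The desired-state idea behind Deployments can be illustrated with a toy reconciliation loop: compare what is declared with what is running, and act on the difference. This is a simplified Go sketch with hypothetical names, not how the real Deployment controller works (it manages ReplicaSets, watches the API and follows a rollout strategy).

```go
package main

import "fmt"

// DeploymentSpec is a toy stand-in for the desired state a Deployment
// declares; it is not the real Kubernetes API type.
type DeploymentSpec struct {
	Name     string
	Replicas int
}

// reconcile returns how many pods to create (positive) or delete (negative)
// so that the observed state matches the desired one.
func reconcile(spec DeploymentSpec, runningPods int) int {
	return spec.Replicas - runningPods
}

func main() {
	spec := DeploymentSpec{Name: "go-app", Replicas: 3}
	running := 1 // hypothetical: two pods were lost when a node failed

	for {
		delta := reconcile(spec, running)
		if delta == 0 {
			break // observed state matches the desired state
		}
		if delta > 0 {
			fmt.Printf("create %d pod(s)\n", delta)
		} else {
			fmt.Printf("delete %d pod(s)\n", -delta)
		}
		running += delta // pretend the change was applied instantly
	}
	fmt.Printf("%s: %d/%d pods running\n", spec.Name, running, spec.Replicas)
}
```

Because only the target is declared, the same loop handles scaling up, scaling down and recovering from failures.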

The command-line tool for managing the cluster is kubectl, which talks to the Kubernetes API. Changes can be applied with imperative commands, but declarative YAML manifests are the way to go for a reproducible environment.

When it comes to exposing services to the outside world, an alternative to a Service of type LoadBalancer is to combine ClusterIP Services with an Ingress. A LoadBalancer Service per application results in a new public IP and load balancer in the cloud for each one, which can get expensive. An Ingress controller such as ingress-nginx routes HTTP and HTTPS traffic to internal services based on the host header or request path, using a single public IP and cloud load balancer. With projects like external-dns and cert-manager, the management of DNS records and TLS certificates is fully automated.
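The routing decision an Ingress controller makes can be sketched as a lookup on host and path. This toy Go router is an illustration only: the hostnames and Service names are hypothetical, and it ignores ports in the Host header, wildcard hosts, `Exact` paths and TLS. Paths are compared segment by segment, roughly like the Ingress `Prefix` path type.

```go
package main

import (
	"fmt"
	"strings"
)

// Rule is a simplified Ingress rule mapping a host and path prefix to a
// ClusterIP Service name.
type Rule struct {
	Host    string
	Path    string
	Service string
}

// matchesPrefix compares paths segment by segment, so "/api" matches "/api"
// and "/api/users" but not "/apiary".
func matchesPrefix(prefix, path string) bool {
	if prefix == "/" {
		return true
	}
	p := strings.TrimSuffix(prefix, "/")
	return path == p || strings.HasPrefix(path, p+"/")
}

// route returns the Service for a request, preferring the longest matching
// prefix among rules whose host equals the request's Host header.
func route(rules []Rule, host, path string) (string, bool) {
	best, service := -1, ""
	for _, r := range rules {
		if r.Host == host && matchesPrefix(r.Path, path) && len(r.Path) > best {
			best, service = len(r.Path), r.Service
		}
	}
	return service, best >= 0
}

func main() {
	rules := []Rule{
		{Host: "app.example.com", Path: "/", Service: "web"},
		{Host: "app.example.com", Path: "/api", Service: "api"},
		{Host: "blog.example.com", Path: "/", Service: "blog"},
	}
	requests := [][2]string{
		{"app.example.com", "/api/users"}, // api
		{"app.example.com", "/apiary"},    // web: "/api" is not a segment prefix
		{"blog.example.com", "/posts/1"},  // blog
		{"shop.example.com", "/"},         // no rule matches
	}
	for _, req := range requests {
		svc, ok := route(rules, req[0], req[1])
		fmt.Printf("%s%s -> %q (matched=%v)\n", req[0], req[1], svc, ok)
	}
}
```

All of these requests arrive at the same public IP; only the host and path decide which internal Service receives them.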

Proof of concept

As I was following a course on Udemy, I adapted some of its examples to a simple use case: deploying a Go application with the entire infrastructure managed by Terraform. With the Azure DevOps provider and the Azure provider, I was able to automate most of the provisioning. I only had to configure a few things manually in Azure DevOps (adding the Kubernetes Environment and creating the pipelines) and edit some variables in the pipeline definitions once the resources were provisioned. You should be able to reproduce everything by cloning the repo. I will only describe the overall structure, as each file is easy to follow:

```
.
```

I opted to include the cert-manager and ingress-nginx manifests in the repo, but they could also be deployed from their GitHub URLs or with Helm. The azure.json file holds the details of the managed identity created by AKS, which also has a role assignment (RBAC) to edit DNS records in the Azure DNS zone created by Terraform. To get the required IDs for the config file:

```bash
# managed identity client id
```

After deploying the resources, I created NS records for aks.briefbytes.com pointing to the assigned Azure DNS name servers, delegating this zone to Azure. A limitation of the Azure DevOps KubernetesManifest task forced me to split the cert-manager manifest in two, because a single task could not deploy Kubernetes objects into two different namespaces.
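Once the NS records are in place, the delegation can be checked with `dig NS` or with a small Go program such as the sketch below. It assumes Azure DNS name servers live under the azure-dns.com, .net, .org and .info domains, as shown in the zone overview; compare with your own zone. The program name `checkns` is hypothetical.

```go
package main

import (
	"fmt"
	"net"
	"os"
	"strings"
)

// azureDNSSuffixes lists the domains Azure DNS name servers are assumed to
// use (e.g. ns1-01.azure-dns.com.).
var azureDNSSuffixes = []string{".azure-dns.com", ".azure-dns.net", ".azure-dns.org", ".azure-dns.info"}

// delegatedToAzure reports whether every name server host is an Azure DNS server.
func delegatedToAzure(hosts []string) bool {
	if len(hosts) == 0 {
		return false
	}
	for _, h := range hosts {
		h = strings.TrimSuffix(strings.ToLower(h), ".") // NS hosts are fully qualified
		ok := false
		for _, s := range azureDNSSuffixes {
			if strings.HasSuffix(h, s) {
				ok = true
				break
			}
		}
		if !ok {
			return false
		}
	}
	return true
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: checkns <zone>, e.g. checkns aks.example.com")
		return
	}
	nss, err := net.LookupNS(os.Args[1])
	if err != nil {
		fmt.Println("lookup failed:", err)
		os.Exit(1)
	}
	hosts := make([]string, 0, len(nss))
	for _, ns := range nss {
		hosts = append(hosts, ns.Host)
	}
	fmt.Println(hosts, "delegated to Azure:", delegatedToAzure(hosts))
}
```

Until the delegation has propagated, external-dns can already write records to the Azure zone, but public resolvers will not see them.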

Terraform is responsible for creating the Kubernetes cluster, the container registry (ACR) that stores the images, the public DNS zone and the role assignments, and for configuring the project and service connections in Azure DevOps. This is achieved by running the following:

```bash
# this could also be on a pipeline using service principal or managed identity
```

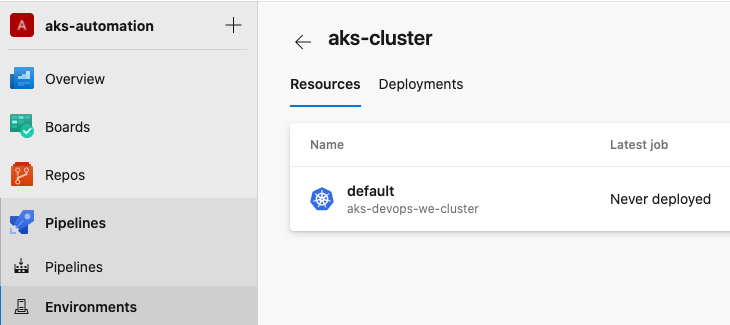

Adding an environment in Azure DevOps creates another service connection, and deployments from different pipelines will be visible there. This must be done manually, as the Terraform provider does not support it yet.

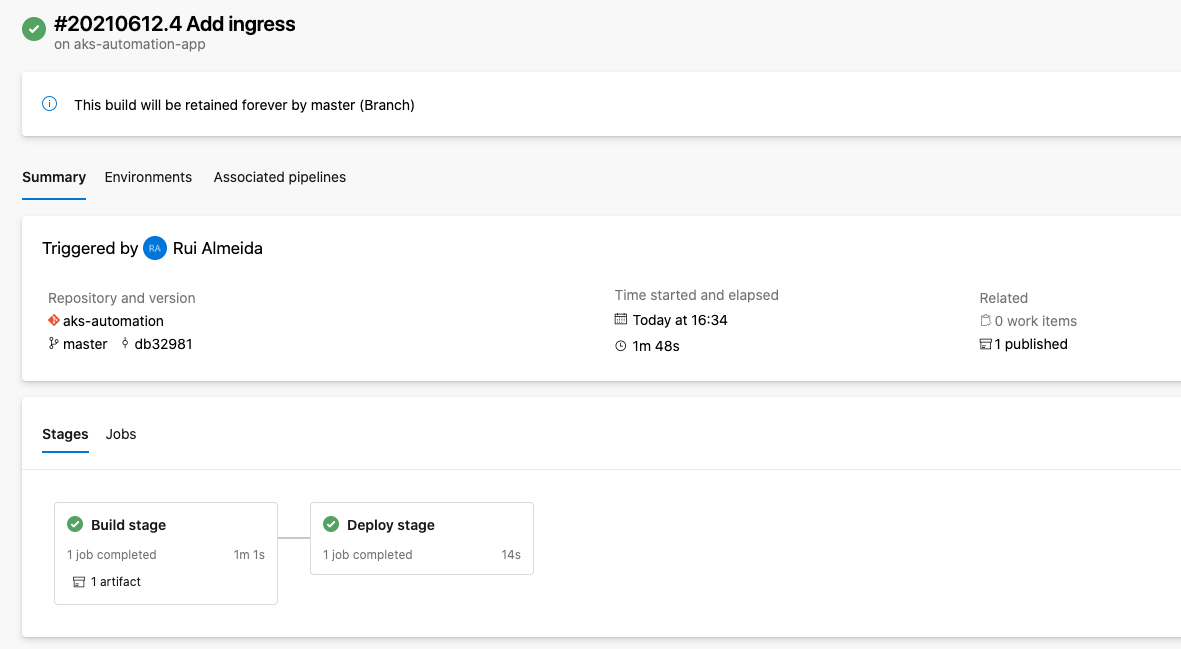

After adding the environment, create the pipeline and edit the ACR name, its service connection ID and, of course, set your own DNS zone. Your pipeline should then run successfully:

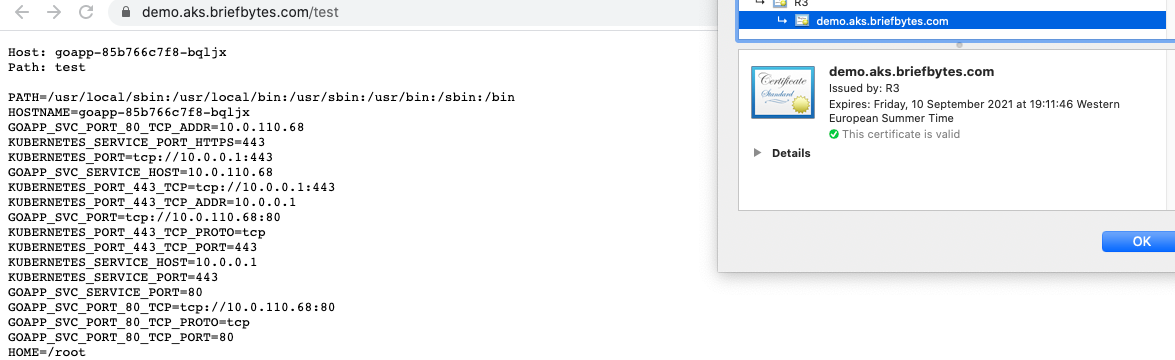

DNS records are created quickly, but TLS certificates from Let’s Encrypt can take a few minutes to be issued. You can use the following commands to inspect the logs:

```bash
kubectl logs -n cert-manager -f <cert-manager-pod>
```

Take care when changing domain names in production to avoid downtime. Do not forget readiness and liveness probes on real apps to ensure high availability: services may take a few seconds to start up, and they can become unhealthy later, for example because of a bad connection.

The final result should look like this:

With this setup, whenever code is pushed or merged to the master branch, the pipeline publishes a new image if the unit tests pass and performs a rolling update without downtime. To save on AKS costs, do not enable logging, as Log Analytics is expensive; in production, however, make sure to set up proper monitoring with Prometheus and logging with the Elastic Stack or their alternatives. After testing, the cluster can be destroyed with Terraform or shut down with the Azure CLI:

```bash
az extension add --name aks-preview
```
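The zero-downtime rolling update mentioned above comes from the Deployment’s RollingUpdate strategy, whose `maxSurge` and `maxUnavailable` default to 25%. Kubernetes rounds the surge up and the unavailable count down; this Go sketch does that arithmetic for a few replica counts. It only covers positive percentages and is an illustration, not the controller’s code.

```go
package main

import "fmt"

// Default RollingUpdate percentages for a Deployment.
const defaultMaxSurgePct, defaultMaxUnavailablePct = 25, 25

// rollingBounds returns the maximum number of pods that may exist and the
// minimum number that must stay available during a rollout.
func rollingBounds(replicas, surgePct, unavailablePct int) (maxPods, minAvailable int) {
	surge := (replicas*surgePct + 99) / 100       // round up
	unavailable := replicas * unavailablePct / 100 // round down
	return replicas + surge, replicas - unavailable
}

func main() {
	for _, r := range []int{1, 3, 10} {
		maxPods, minAvail := rollingBounds(r, defaultMaxSurgePct, defaultMaxUnavailablePct)
		fmt.Printf("replicas=%d: at most %d pods, at least %d available\n", r, maxPods, minAvail)
	}
}
```

With 3 replicas the bounds are 4 and 3: a new pod must become ready before an old one is removed, which is why readiness probes matter for a rollout without downtime.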

Conclusion

The cloud and Kubernetes bring massive improvements in productivity, scalability and reliability, and their adoption will surely continue to grow. Custom Resource Definitions (CRDs) and operators let Kubernetes manage more than containers; for example, you can manage Kafka topics. There are many more Kubernetes and AKS topics that could be covered, but I will finish this post with some useful docs: